Planned Missingness Data Designs for Human Ratings in Creativity Research: A Practical Guide

Image credit: Authors

Abstract

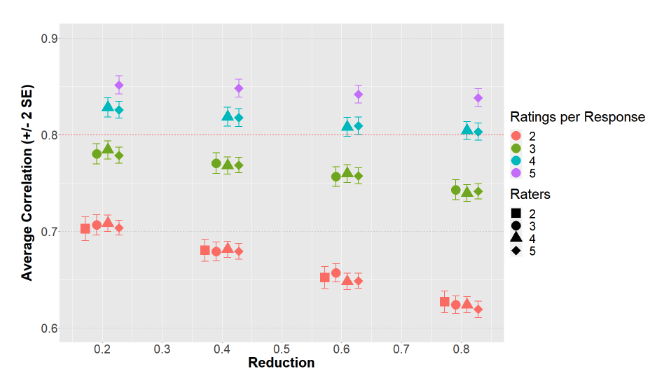

Human ratings are ubiquitous in creativity research. Yet rating responses to creativity tasks (typically several hundred or even thousands of responses per rater) is often time-consuming and expensive. Planned missing data designs, in which each rater rates only a subset of all responses, have recently been proposed as one way to reduce overall rating time and monetary costs. However, researchers also need ratings that meet psychometric standards, such as a sufficient degree of reliability, and psychometric work on planned missing data designs is currently lacking in the literature. In this work, we show how judge response theory and simulations can be used to fine-tune the planning of missing data designs. We provide open code for the community and illustrate the proposed approach with a cost-effectiveness calculation based on a realistic example. We demonstrate that such fine-tuning saves both rating time and monetary costs while still targeting expected levels of reliability.
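The trade-off described above (fewer ratings per response lowers cost but also lowers reliability) can be made concrete with a small simulation. The sketch below is a hypothetical illustration and not the authors' published code. It swaps judge response theory for a simple additive rater model (true score plus rater severity plus normal error) and assumes a cyclic, overlapping assignment of raters to responses. The function names, the 30-second rating time, and the hourly wage are all assumptions chosen for illustration.

```python
import math
import random


def build_design(n_responses, n_raters, raters_per_response):
    """Hypothetical cyclic planned missing design: response i is rated by
    `raters_per_response` consecutive raters starting at rater i mod n_raters.
    Neighbouring windows overlap, so all raters stay linked via shared responses."""
    if not 1 <= raters_per_response <= n_raters:
        raise ValueError("raters_per_response must be between 1 and n_raters")
    return [
        [(i + r) % n_raters for r in range(raters_per_response)]
        for i in range(n_responses)
    ]


def rating_cost(design, seconds_per_rating, hourly_wage):
    """Total number of ratings, rater hours, and monetary cost of a design."""
    n_ratings = sum(len(raters) for raters in design)
    hours = n_ratings * seconds_per_rating / 3600
    return n_ratings, hours, hours * hourly_wage


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate_reliability(design, n_raters, error_sd=1.0, severity_sd=0.3, seed=1):
    """Simulated reliability of per-response mean scores under an ASSUMED
    additive rater model (true score + rater severity + normal error), not the
    judge response theory model. Because true scores are known in a simulation,
    reliability is estimated as the squared correlation between true scores
    and the mean of each response's available ratings."""
    rng = random.Random(seed)
    severity = [rng.gauss(0.0, severity_sd) for _ in range(n_raters)]
    true_scores, mean_scores = [], []
    for raters in design:
        theta = rng.gauss(0.0, 1.0)
        ratings = [theta + severity[j] + rng.gauss(0.0, error_sd) for j in raters]
        true_scores.append(theta)
        mean_scores.append(sum(ratings) / len(ratings))
    return pearson(true_scores, mean_scores) ** 2


if __name__ == "__main__":
    # Hypothetical scenario: 2000 responses, 6 raters, 30 s per rating, wage 15 per hour.
    for k in (6, 3):  # complete design vs. planned missing design
        design = build_design(2000, 6, k)
        n_ratings, hours, cost = rating_cost(design, seconds_per_rating=30, hourly_wage=15)
        rel = simulate_reliability(design, n_raters=6)
        print(f"{k} raters/response: {n_ratings} ratings, {hours:.1f} h, "
              f"cost {cost:.2f}, simulated reliability {rel:.3f}")
```

Halving the raters per response here halves the number of ratings (12000 vs. 6000) and thus the cost, while the simulated reliability drops. Fine-tuning then amounts to scanning a grid of candidate designs and keeping the cheapest one whose simulated reliability still reaches the target. The abstract describes doing this with judge response theory rather than the simplified additive model assumed in this sketch.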