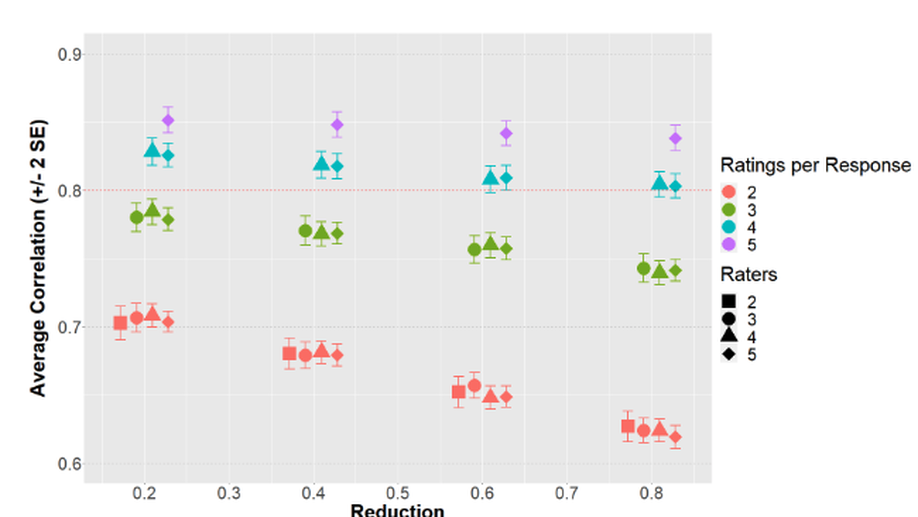

Planned Missingness Data Designs for Human Ratings in Creativity Research: A Practical Guide

Creativity research relies heavily on human ratings, which are laborious and costly to collect because each rater must judge a large volume of responses. Planned missing data designs offer a potential solution by having raters assess only a subset of responses, yet ensuring that the ratings still meet psychometric standards such as reliability remains a challenge. Our work shows how judge response theory and simulations can be used to optimize these designs. We provide open-source code and demonstrate in a practical example that this fine-tuning balances time and cost savings against the expected reliability of the ratings.
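The trade-off described above can be illustrated with a minimal simulation sketch. It is not the authors' open-source code and does not use judge response theory; it assumes a simple classical model in which each rating is the response's true score plus a rater severity effect plus random noise. The function name `simulate_design` and all parameter values are hypothetical.

```python
import random
from statistics import mean

def pearson(x, y):
    """Plain Pearson correlation between two equally long lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def simulate_design(n_responses=500, n_raters=10, raters_per_response=3,
                    noise_sd=1.0, severity_sd=0.5, seed=1):
    """Simulate one planned missing data design (assumed model, see lead-in).

    Returns the squared correlation between true scores and mean ratings
    (a simple reliability proxy) and the number of ratings each rater
    has to provide on average.
    """
    rng = random.Random(seed)
    true_scores = [rng.gauss(0, 1) for _ in range(n_responses)]
    severity = [rng.gauss(0, severity_sd) for _ in range(n_raters)]
    observed = []
    for t in true_scores:
        # Each response is rated by a random subset of the rater pool.
        raters = rng.sample(range(n_raters), raters_per_response)
        ratings = [t + severity[j] + rng.gauss(0, noise_sd) for j in raters]
        observed.append(mean(ratings))
    reliability = pearson(true_scores, observed) ** 2
    ratings_per_rater = n_responses * raters_per_response / n_raters
    return reliability, ratings_per_rater

if __name__ == "__main__":
    for k in (2, 3, 5, 10):
        rel, load = simulate_design(raters_per_response=k)
        print(f"{k} raters per response: reliability ~ {rel:.2f}, "
              f"{load:.0f} ratings per rater")
```

Running the loop for several values of `raters_per_response` shows how the workload per rater grows while the reliability proxy improves, which is the kind of comparison a design simulation is meant to support.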

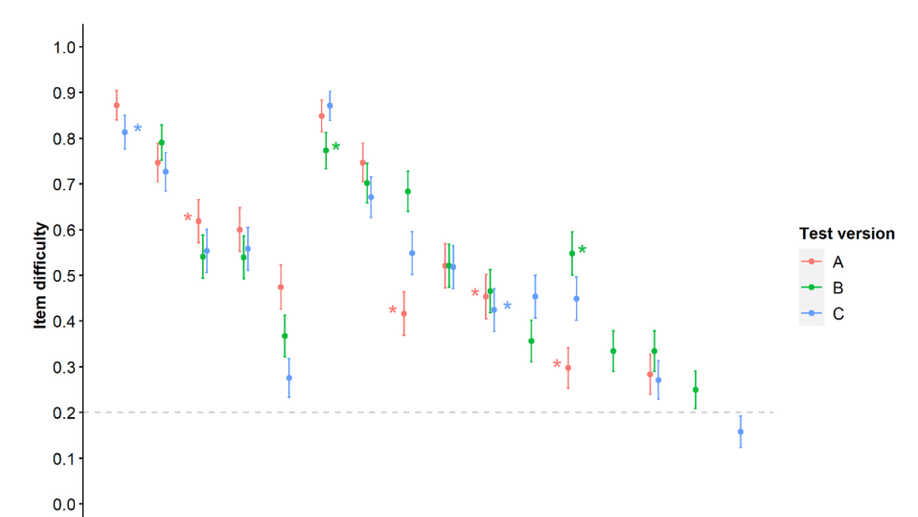

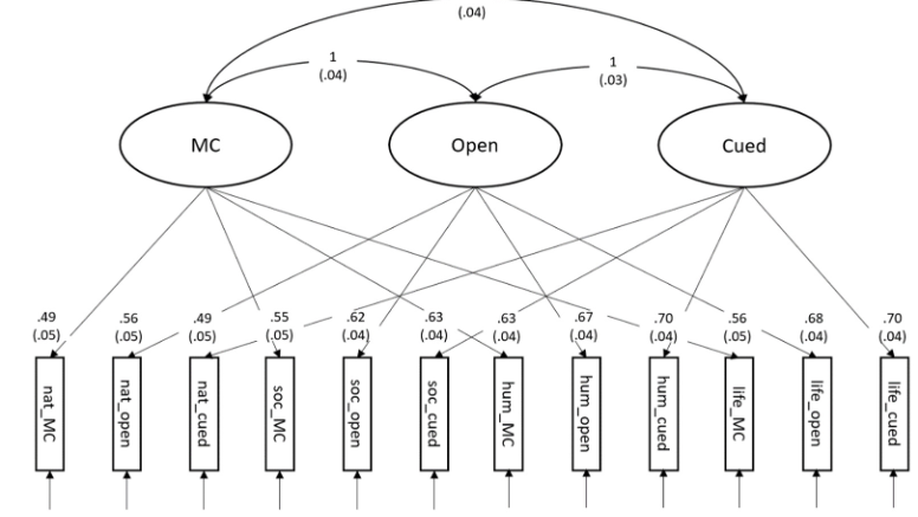

Stop Worrying about Multiple-Choice: Fact Knowledge Does Not Change with Response Format

This paper discusses the differences between multiple-choice and open-ended formats for measuring crystallized intelligence, that is, declarative fact knowledge. It has been suggested that multiple-choice formats only require recognizing the correct response, whereas open-ended formats require additional cognitive processes such as searching long-term memory, retrieving candidate answers, and actively deciding on a response. Two online studies were conducted to test these assumptions. The results showed that items are more difficult in open-ended formats, but that the response format does not affect what is measured. This suggests that crystallized intelligence does not change as a function of the response format.

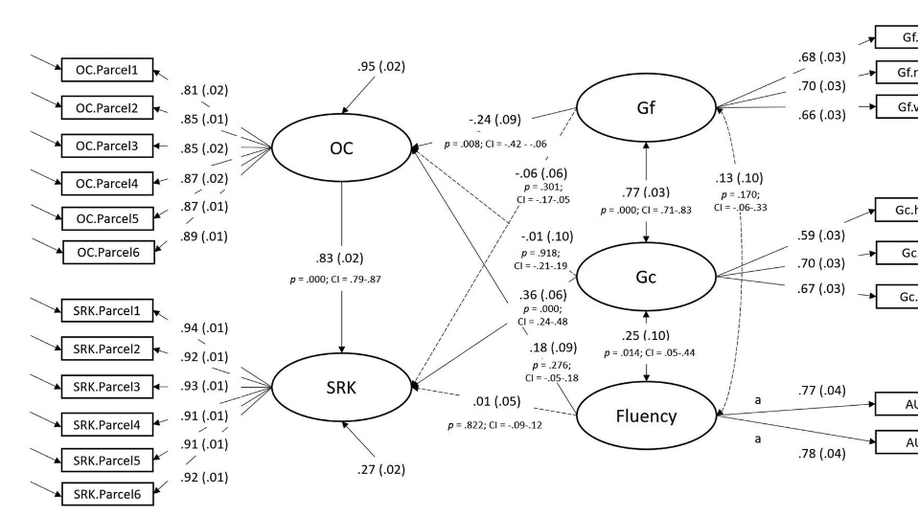

The Nomological Net of Knowledge, Self-Reported Knowledge, and Overclaiming in Children

This research aims to close the gap in what is known about self-reported knowledge and overclaiming in children. A questionnaire was developed to measure both in a sample of 897 third-grade children. Three perspectives were discussed: overclaiming as a result of deliberate self-enhancement, as a proxy for declarative knowledge, and as an indicator of creative engagement. The results showed that individual differences in self-reported knowledge were strongly inflated by overclaiming and only weakly related to declarative knowledge, similar to findings in adult samples.

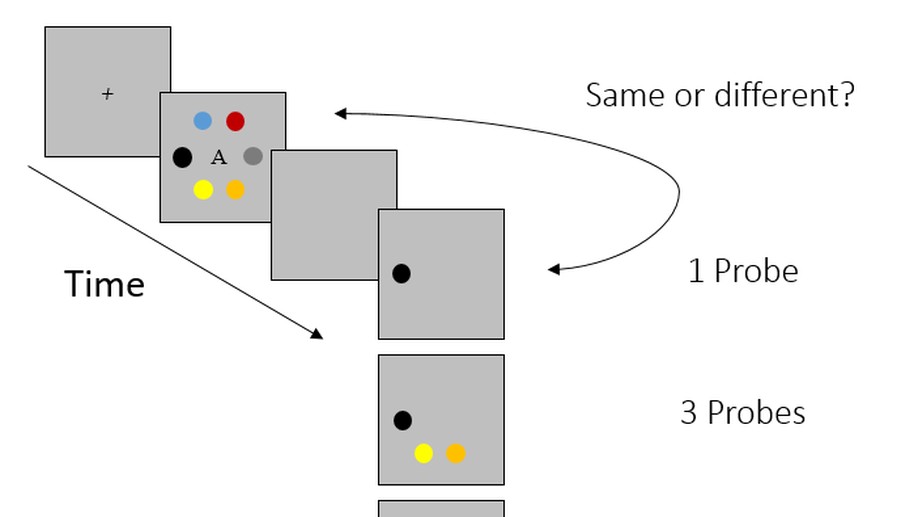

Is long-term memory used in a visuo-spatial change-detection paradigm?

We investigated why several attempts to demonstrate the Hebb repetition effect with arrays of simple visual stimuli in a classical change-detection context have failed. We manipulated long-term memory representations of three six-color target arrays and compared the performance on these target arrays in a change-detection test with the performance on randomly generated arrays.

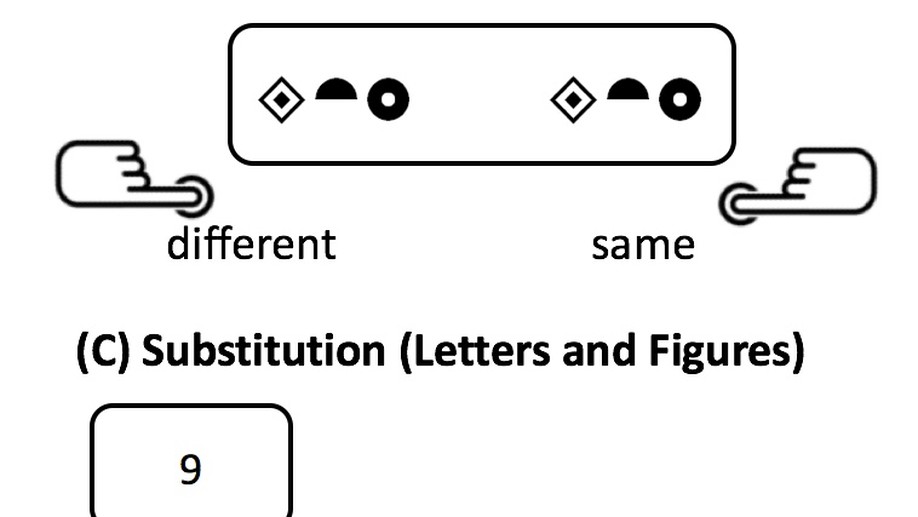

Binding Costs in Processing Efficiency as Determinants of Cognitive Ability

The aim of this study was to replicate and extend previous research on how task complexity moderates the relationship between mental speed and cognitive ability. Using a well-defined theoretical binding framework, we intended to offer a more meaningful account of “task complexity”. We conclude that binding requirements and, therefore, demands on working memory capacity offer a satisfactory account of task complexity, one that explains a large portion of individual differences in ability.

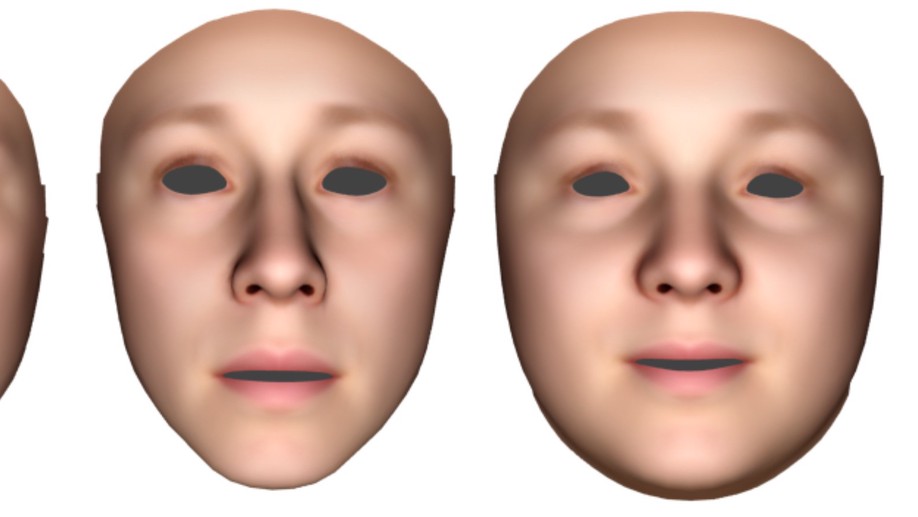

Symmetric or not? A holistic approach to the measurement of fluctuating asymmetry from facial photographs.

We evaluate two traditional scoring methods, the Horizontal Angular Asymmetry (HAA) and the Horizontal Fluctuating Asymmetry (HFA), and propose a geometric morphometrics-based Holistic Facial Asymmetry Score (HFAS).

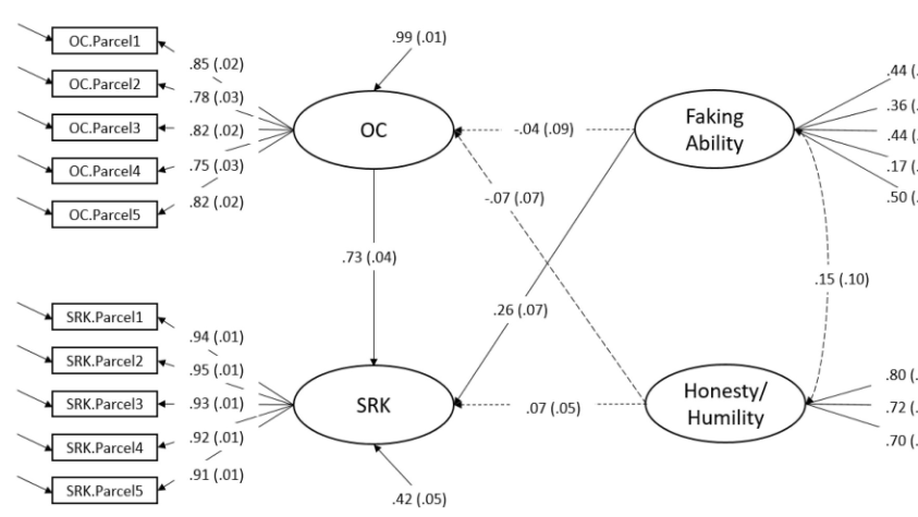

Testing Competing Claims About Overclaiming

We discuss four different perspectives on the phenomenon of overclaiming that have been proposed in the research literature: overclaiming as (a) a result of self-enhancement tendencies, (b) a cognitive bias (e.g., hindsight bias, memory bias), (c) a proxy for cognitive abilities, and (d) a sign of creative engagement. Moreover, we discuss two different scoring methods for an overclaiming questionnaire (OCQ): signal detection theory vs. familiarity ratings.
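As a rough illustration of the signal detection scoring mentioned above, the sketch below computes the standard sensitivity index d' and response criterion c from the number of real items a respondent claims to know (hits) and the number of non-existent foils they claim to know (false alarms). It is not the scoring code used in the paper. The function name `sdt_scores`, the log-linear correction for extreme rates, and the assumption that familiarity ratings have already been dichotomized into "claimed" vs. "not claimed" are all choices made here for illustration.

```python
from statistics import NormalDist

def sdt_scores(hits, n_real, false_alarms, n_foils):
    """Return (d_prime, criterion) for one respondent.

    hits:          real items the respondent claims to know
    n_real:        number of real items in the questionnaire
    false_alarms:  foil items the respondent claims to know
    n_foils:       number of foil items in the questionnaire
    """
    # Log-linear correction (an assumption of this sketch) keeps the
    # rates away from 0 and 1, where the z-transform is undefined.
    hit_rate = (hits + 0.5) / (n_real + 1)
    fa_rate = (false_alarms + 0.5) / (n_foils + 1)

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)            # knowledge accuracy
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))  # response bias
    return d_prime, criterion

if __name__ == "__main__":
    d, c = sdt_scores(hits=15, n_real=20, false_alarms=5, n_foils=20)
    print(f"d' = {d:.2f}, c = {c:.2f}")
```

Under this scoring, a higher d' indicates better discrimination between real items and foils, and a lower (more negative) c indicates a more liberal tendency to claim knowledge, which is how overclaiming shows up in the bias index.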